The purpose of this lab is to build a 2D map of a static room. The "room" is set up in the front room of the lab with cardboard boxes and wood boards. The robot is manually placed at several spots in the room, and at each spot it collects distance readings from its ToF sensor while turning in place. The data from all spots are then merged into a map of the whole room. Finally, a line-based map is created from the merged map.

PID control for turning

At this point, the robot has already been wired up with ToF sensors, an IMU, and motor drivers connected to the motors. Hardware-wise, the robot is capable of performing all the tasks in this lab. All the work that is left to do is software programming.

The first task is to have the robot turn in place in small, accurate increments. Later on, the robot will log the ToF sensor reading and the accumulated gyroscope orientation at each increment. Because I completed task B in the PID lab, PID control of turning is simply a matter of slowly incrementing the set point. The code snippet below shows how this is done.

    case mapping:
        // get current orientation
        current_orientation = my_imu.get_ang().yaw_gyr;
        // pid compute
        orientation_controller.compute();
        // feed updated turn speed into the motors
        my_motors.car_turn(turn_speed, -turn_speed);
        // increment the set orientation
        if (delay_nonblocking(50)) set_orientation += 3;

The code is short and works well. A 50 ms non-blocking delay separates successive 3-degree increments to simulate the sampling time of the ToF sensor.

Confirm the performance of PID controller

The performance of the PID controller can be confirmed by combining the two methods below:

- Maintain an array of the latest 10 differences between the set point and the current orientation. Only increment the set point when every element in the array is less than the step size.

- Have the robot turn in place and visually confirm that it is not progressing too slowly, not oscillating, and not stuck.

The first method can be realized by the code snippet below.

    // maintain lookback array (circular buffer of recent orientation errors)
    orientation_difference[lookback_idx++] = abs(set_orientation - current_orientation);
    if (lookback_idx == lookback_samples)
        lookback_idx = 0;
    // advance the set point only when every recent error is below the step size
    max_diff = orientation_difference.find_max();
    if (max_diff < step_size) set_orientation += step_size;
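The gating logic can also be modeled off the robot to see how it behaves. The following is a minimal Python sketch, not the firmware itself: the class name `SettleGate` is hypothetical, and two details are assumptions that differ from the snippet above (the gate waits until the window is full, and it clears the window after each step so that stale errors from the previous set point are not reused).

```python
from collections import deque


class SettleGate:
    """Model of the lookback check: step the set point only when settled."""

    def __init__(self, lookback_samples=10, step_size=3.0):
        self.step_size = step_size
        # A deque with maxlen silently drops the oldest error when full.
        self.errors = deque(maxlen=lookback_samples)

    def update(self, set_orientation, current_orientation):
        """Record the latest error and return the (possibly advanced) set point."""
        self.errors.append(abs(set_orientation - current_orientation))
        window_full = len(self.errors) == self.errors.maxlen
        if window_full and max(self.errors) < self.step_size:
            # Assumption: start a fresh window for the new set point.
            self.errors.clear()
            return set_orientation + self.step_size
        return set_orientation


gate = SettleGate(lookback_samples=3, step_size=3.0)
set_point = 0.0
for yaw in (0.5, 0.2, 0.1):  # three small errors in a row
    set_point = gate.update(set_point, yaw)
print(set_point)  # the set point has advanced by one step
```

Clearing the window forces at least `lookback_samples` fresh readings before the next step, which trades a little speed for a stricter settle check.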

The video below shows the second confirmation. The robot turns in place without much shifting. It makes progress fairly quickly (120 data points collected in ~30 s) even with orientation checking. There is little oscillation or overshoot, which indicates that the PID control is working well.

PID orientation control with robot turning in place.

PID parameters

I am using the following PID parameters:

double kp = 30;

double ki = 2e-3;

double kd = 25;

These parameters are very close to what I used in Lab 6, which I chose by following the two heuristics the instructor discussed in lecture. kp and kd are tuned lower to further avoid overshooting, because the robot in this lab is supposed to turn much more slowly than in Lab 6.

Obstacles setup and data collection

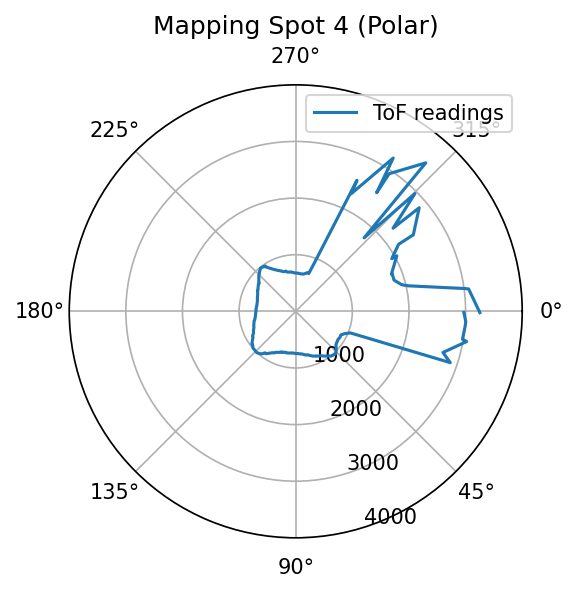

The map below shows the physical setup of the "room."

Physical setup of the map and obstacles.


The robot will be collecting data in designated spots. The spots are marked with white tape with their corresponding coordinates. There is also a spot corresponding to the origin. The picture below shows one such spot with the coordinate (5, -3).

Picture of a label. The robot turns in place on these labeled spots and collects mapping data.


On the programming side, only minimal changes are needed. The robot takes a time-of-flight measurement when it is close enough to the set point, and logs the distance together with the yaw angle computed from the gyroscope. It then changes the set point and repeats until it has turned more than 360 degrees. After that, the robot sends both arrays back to my laptop for visualization with the help of my debugging scripts. The video below shows my robot collecting data at one of the spots in the lab.

My robot collecting data in the map.

Post-processing: plotting data

Because I am using orientation PID control, I have to trust the orientation obtained by integrating the gyroscope readings. The robot's starting orientation differs from spot to spot, so for sanity checking the plots are drawn with a common viewing orientation that matches the map. In the later data processing, a per-spot offset added to the gyroscope angle aligns these orientations.

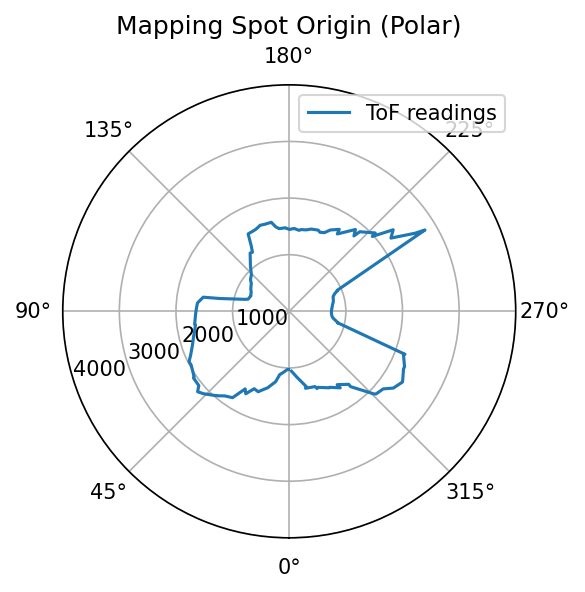

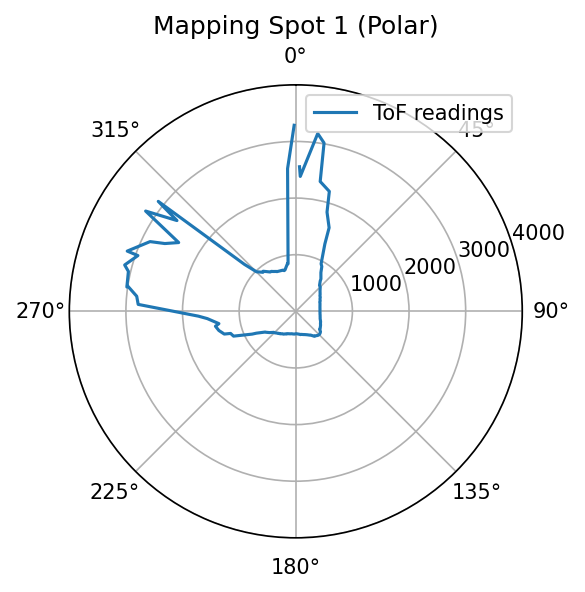

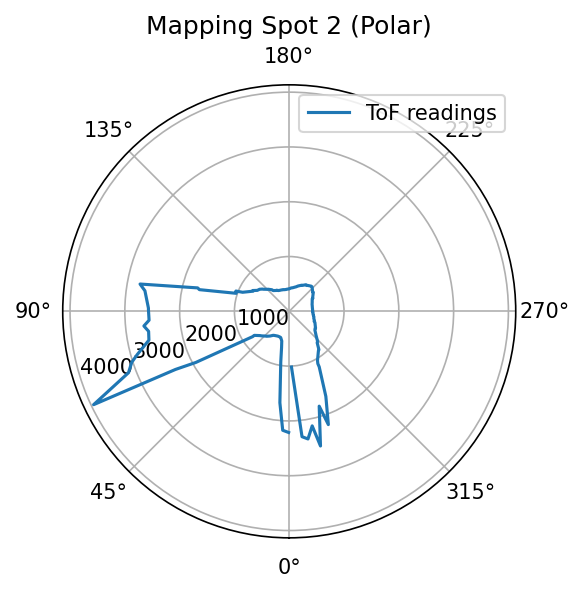

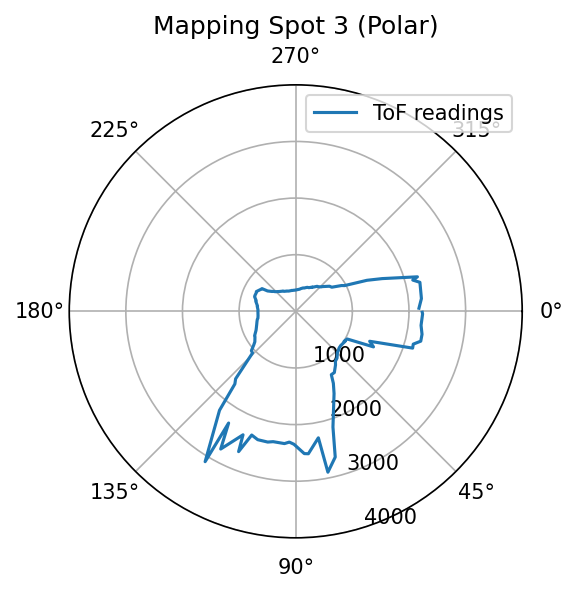

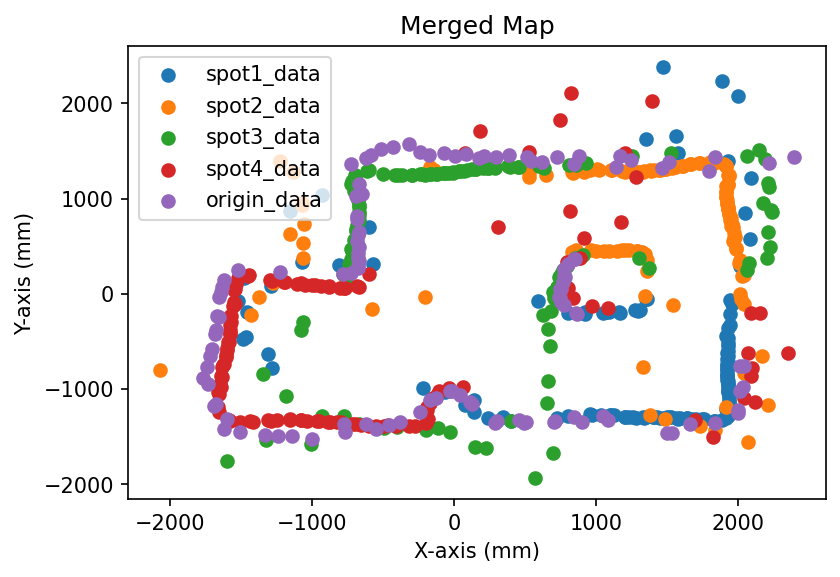

Because the robot turns in place and measures the distance to the walls, polar plots are a quick way to visualize the data for sanity checking. The pictures below show the polar plot for each spot where measurements were taken, along with a labeled picture of the map showing where each spot is located and its coordinates.

Polar plots at each spot. The viewing orientations are aligned.


Real map set up with labels to show where the spots are.


Sanity checking shows better-than-expected consistency between the measured polar plots and the real-world setup, which gives me confidence to proceed with the lab.

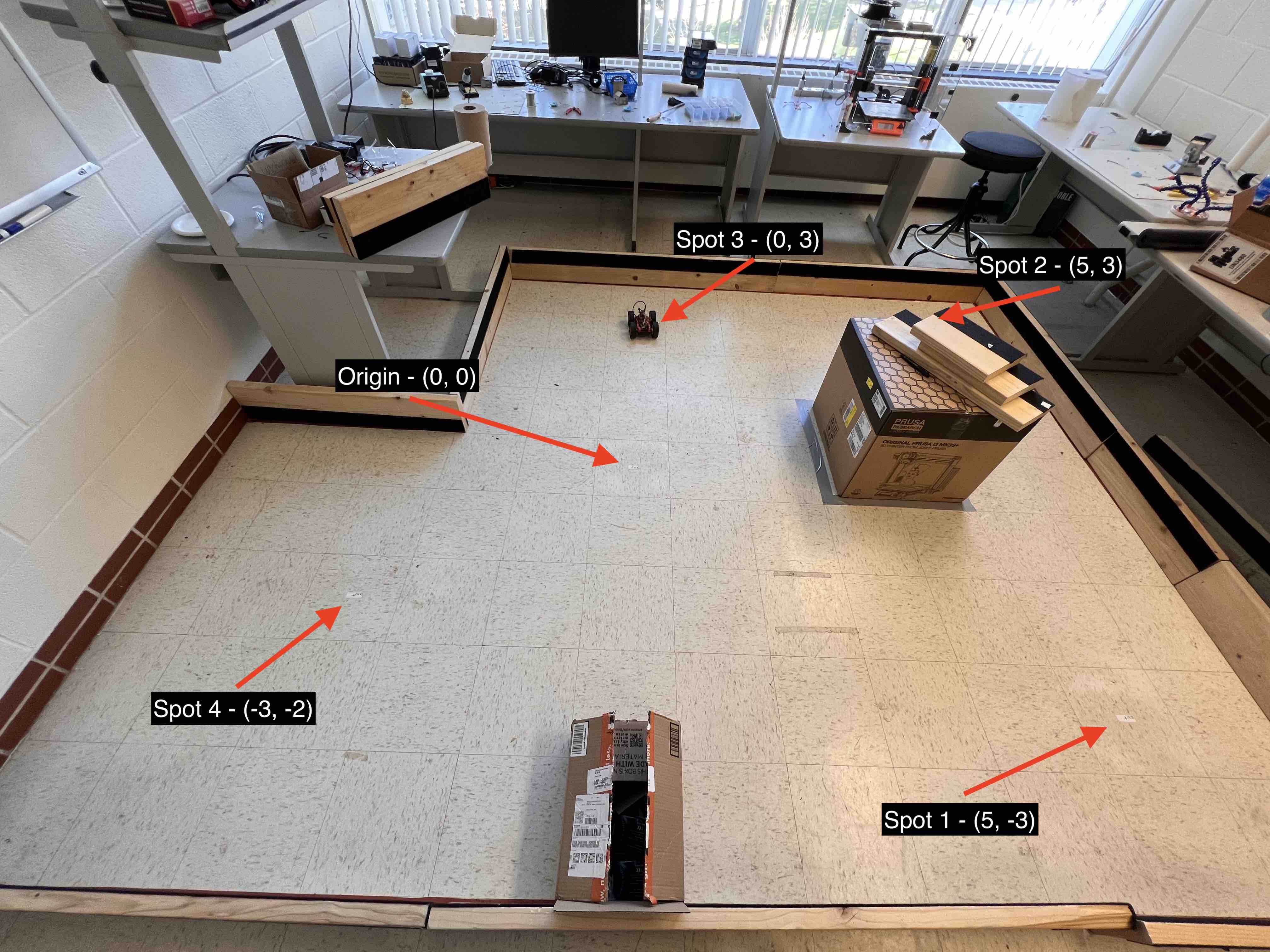

Post-processing: transformation and merging

Orientation alignment

Because the starting orientation of the robot is different in different spots, I need to add offsets to the angle data for each spot such that they are aligned. Luckily, the offset needed is already figured out in the sanity checking stage. What is left is to add an offset to the angle data array.
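The offset step can be sketched as follows. This is a minimal illustration rather than the script used for the plots: the function name `align_angles`, the sample angles, and the 90-degree offset are made up, and the angles are assumed to be in degrees. Wrapping into [0, 360) is optional for the later sine and cosine but keeps the values tidy for plotting.

```python
def align_angles(angles_deg, offset_deg):
    """Add a per-spot heading offset and wrap each angle into [0, 360)."""
    return [(angle + offset_deg) % 360.0 for angle in angles_deg]


# Hypothetical yaw readings from one spot and a hypothetical 90-degree offset.
spot_angles = [0.0, 3.0, 357.0]
aligned = align_angles(spot_angles, 90.0)
print(aligned)  # [90.0, 93.0, 87.0]
```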

Converting from polar coordinates to cartesian coordinates

The data collected by the robot consist of the robot's orientation and the distance it measures at that orientation. Essentially, these are polar coordinates (rho, phi). We first need to convert them into Cartesian coordinates. The code snippet below does the job.

    import numpy as np

    def pol2cart(rho, phi):
        # phi must be in radians, as expected by np.cos and np.sin
        x = rho * np.cos(phi)
        y = rho * np.sin(phi)
        return x, y
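One detail worth checking is the angle unit: the trigonometric functions work in radians, while the firmware steps the set point in degrees. Assuming the logged yaw is in degrees and the distance is in millimeters (both assumptions about the log format), a conversion is needed first. The sketch below uses only the standard library, and `pol2cart_deg` is a hypothetical helper name.

```python
import math


def pol2cart_deg(rho_mm, phi_deg):
    """Convert a (distance, yaw in degrees) reading to Cartesian x, y."""
    phi = math.radians(phi_deg)  # degrees -> radians before cos/sin
    return rho_mm * math.cos(phi), rho_mm * math.sin(phi)


# A wall seen 1000 mm away at a yaw of 90 degrees lies along the +y axis.
x, y = pol2cart_deg(1000.0, 90.0)
print(round(x, 6), round(y, 6))
```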

Transformation to account for the offset

At this point, we have the "wall data points" in Cartesian coordinates with the correct orientation. We now need to account for position offsets: each set of data points was taken at a different spot, so each set must be shifted by its spot's position relative to the origin.

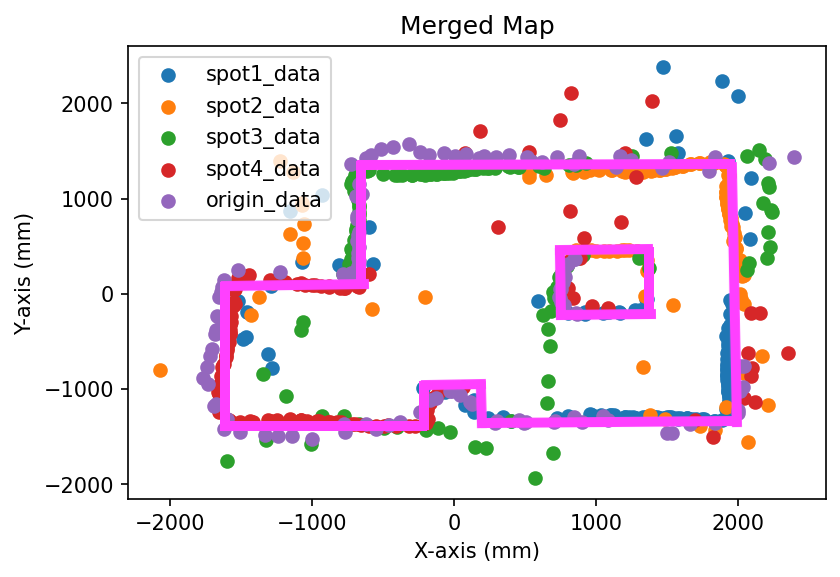

For each data point, the required transformation is simple because there is no rotation, only translation. The transformation matrix is therefore A = [1, 0, off_x; 0, 1, off_y; 0, 0, 1], where (off_x, off_y) is the coordinate of the spot where the measurements were taken. However, directly adding the offsets to the x and y data arrays is an easier and more straightforward implementation that gives the same result. As an engineer, that's what I did, and the result (the picture below) looks good.

Merged map based on robot measurements in different spots.
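The equivalence between the matrix form and the direct addition can be checked with a short sketch. The function names, sample points, and offsets below are hypothetical, and the offsets are assumed to be expressed in the same unit as the data points.

```python
def translate(points, off_x, off_y):
    """Shift every (x, y) point by the spot's offset from the origin."""
    return [(x + off_x, y + off_y) for x, y in points]


def apply_homogeneous(A, points):
    """Apply a 3x3 homogeneous transform A to each (x, y) point."""
    out = []
    for x, y in points:
        v = (x, y, 1.0)
        xp = sum(A[0][k] * v[k] for k in range(3))
        yp = sum(A[1][k] * v[k] for k in range(3))
        out.append((xp, yp))
    return out


# Hypothetical wall points measured at a spot located at (500, -300).
points = [(100.0, 0.0), (0.0, -250.0)]
off_x, off_y = 500.0, -300.0
A = [[1.0, 0.0, off_x],
     [0.0, 1.0, off_y],
     [0.0, 0.0, 1.0]]

print(translate(points, off_x, off_y))  # [(600.0, -300.0), (500.0, -550.0)]
```

With a pure translation, the matrix product reduces to adding the offsets, so both functions return the same points.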


Line-based map

A line-based map is created to make modeling easier. This is done manually by drawing lines on the merged map and reading off the start and end points of each line. The line-based map superimposed on the merged map is shown below.

Line-based map.


Here are the coordinates.

| Description | Start coordinate (mm) | End coordinate (mm) |
|---|---|---|
| South walls | (-1600, -1400) | (-200, -1400) |
| | (-200, -1400) | (-200, -1000) |
| | (-200, -1000) | (200, -1400) |
| | (200, -1000) | (200, -1400) |
| | (200, -1400) | (2000, -1400) |
| East wall | (2000, -1400) | (2000, 1400) |
| North wall | (-800, 1400) | (2000, 1400) |
| West walls | (-800, 1400) | (-800, 0) |
| | (-1600, 0) | (-800, 0) |
| | (-1600, 0) | (-1600, -1400) |
| Isolated box | (1400, 0) | (1400, 500) |
| | (1400, 0) | (800, 0) |
| | (800, 0) | (800, 500) |
| | (800, 500) | (1400, 500) |
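
For later modeling, the segments can be stored as pairs of (start, end) points. The sketch below is one possible representation, not something prescribed by the lab: the variable and function names are hypothetical, and only the east wall and the isolated box are copied from the table to keep the example short.

```python
import math

# (start, end) pairs in mm, copied from the table for two of the features.
east_wall = [((2000, -1400), (2000, 1400))]
isolated_box = [
    ((1400, 0), (1400, 500)),
    ((1400, 0), (800, 0)),
    ((800, 0), (800, 500)),
    ((800, 500), (1400, 500)),
]


def segment_length(segment):
    """Euclidean length of one (start, end) segment in mm."""
    (x0, y0), (x1, y1) = segment
    return math.hypot(x1 - x0, y1 - y0)


def total_length(segments):
    """Sum of the lengths of all segments in a feature."""
    return sum(segment_length(s) for s in segments)


print(total_length(east_wall))     # 2800.0
print(total_length(isolated_box))  # 2200.0
```

Computing lengths like this is a quick way to compare the line map against tape-measure dimensions of the real setup.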